Way past my bedtime…

I’ll always remember the first time that I watched Stanley Kubrick’s Full Metal Jacket.

I definitely wasn’t yet 18, the minimum viewing age set by the BBFC in the UK. It was late on a weekend night, my parents had gone to bed, and I was sitting in the front room of the Surrey house I grew up in.

We’d recently installed satellite television, a luxury after a childhood without it, and as a result I had turned into a square-eyed TV addict. Unable to go to bed for fear of missing some quality piece of programming, I was flicking through the channels and stumbled across Bravo just as the movie began.

I was transfixed by Gunnery Sergeant Hartman’s opening monologue, played to perfection by R. Lee Ermey, a former Marine himself. It was so direct, so cruel, yet so commanding and powerful, and it was packed with classically insulting lines.

Just before it, under the opening credits, the U.S. Marine recruits have their heads shaved in an identical repeated sequence, like moving-image passport photographs, each losing their individuality as part of a wider, humiliating basic training program. The movie kept my young self intrigued, hooked and shocked until the closing credits rolled. What a rollercoaster that film was; what a bloody, awful rollercoaster.

The shame

The picture is divided into two distinct parts: Marine Corps training and the subsequent deployment of those Marines in Vietnam. Although the second half, featuring the Battle of Huế, is bloodier and more action-packed, it was the first half of the movie that stuck with me most.

The cruelty, humiliation and shame at the heart of basic training, bleaching a colorful personality into a compliant soldier, was something I watched with a heavy heart, especially as I was beginning to discover my own uniqueness as a teenager. In particular, the collective punishment that “Private Pyle” is subjected to is imprinted on my memory.

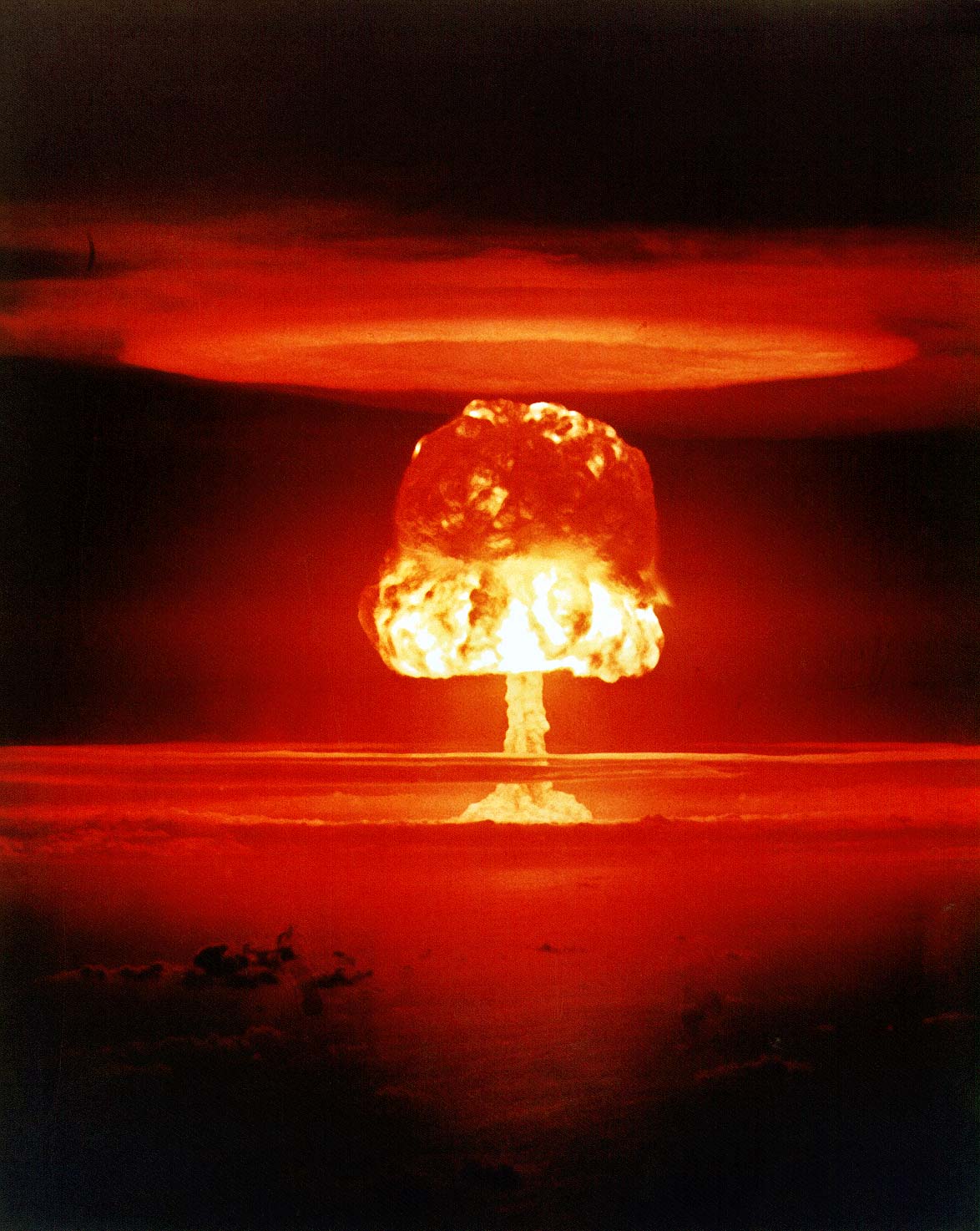

Collective punishment, a tactic employed to make an individual feel the full weight of shame through embarrassment and peer pressure, was deeply unfair. Yet, since the movie was released in 1987, our culture has, wrongly, embraced shame as a weapon.

From the public shaming culture of the tabloid media, which Monica Lewinsky wrote about in 2014 when reflecting on her affair with Bill Clinton, to the more recent phenomenon of toxic, volatile online communities, the visible ousting of individuals by a crowd of proverbial pitchfork bearers is now commonplace.

But environments that encourage humiliation, drubbings, or the general punishment of failure become ineffective, and ultimately fail themselves.

Why?

Humiliation drives incorrect behavior

I was talking to my manager about a particular project that ran at one of his previous employers.

The project was extremely high profile, involving new hardware, software, and the rollout thereof to millions of customers. As such, it required numerous sizable divisions of the company to come together, working on large, non-trivial pieces of work. The executive met regularly with the leaders of those divisions at a steering group, where each leader would report on progress.

Unfortunately, some individuals in the executive used the steering group as an opportunity to openly humiliate those who were running behind or facing difficulties.

The dynamic among participants was to reveal one’s cards gradually and nervously, ideally letting someone else deliver bad news first. Those who did felt the full wrath of the executive in front of their peers: a highly embarrassing barrage. Those who didn’t reveal their weaknesses escaped.

Although this tactic was presumably employed to drive correct behavior through the threat of punishment, over time it had quite the opposite effect. It poisoned the culture of the project and made it less effective:

- The executive didn’t accept that large, multidisciplinary technology deliverables are complex and can, and will, go wrong.

- The participants of the steering group would purposefully hide information, or just lie, to avoid being ousted.

The project kept slipping, but information that could have helped correct its course was never heard until it was too late, forcing the whole thing to be re-estimated after an angry fallout.

An environment that punishes failure with humiliation is unable to change course intelligently in the face of bad news, because that bad news will not get shared for fear of a haranguing.

As a result, the group became ever more dysfunctional with time.

It’s OK to fail

We need to make it OK to deliver bad news. When we deliver it to others, we have an opportunity to learn and do better. When bad news is delivered to us, we need to act constructively.

- Be accepting of failure. If we are working on something innovative, then it is by definition full of unknowns. We will fail. That’s OK. If we fail, we’ll try another approach. We should trust that we did our best.

- Attack ideas, not people. Assuming that good staff are being hired, it’s highly unlikely that people are doing badly on purpose, or just being lazy. Is there something wrong with the idea, the process, or the strategy? Aiming daggers ad hominem appeals to the lowest common denominator and rarely results in constructive discussion. It also misses the opportunity for a wider remedy.

- Fully own projects, all the way to the top. Poor leaders lambast others when something is amiss, as if it had nothing to do with them. It has everything to do with them. Good leaders should embrace the failure of any part of the organization as their own failure too, in order to drive the right kind of problem solving.

- Focus on solutions rather than problems. Energy should be spent distilling the problem into something solvable and then steering the discussion towards how to make it better. Energy focused elsewhere, such as on blame, castigation and reprimand, is wasted effort.

If you happen to find yourself on the receiving end of humiliating attacks, then be the better person and do not rise to the bait. Collect your thoughts, come back later and hope that the other party will engage in a more productive discussion. If that proves too difficult, then communicating another way, such as writing your thoughts down in a document or email, can help refocus a difficult situation.

If, however, these situations recur despite your best efforts, then it might be time to make a clean break: you might be too good for the culture of the company you work for.

A company that is not accepting of failure will not innovate in the long term, and you wouldn’t want to work somewhere that isn’t interesting and innovative, would you?