It’s August. You’re having a decent week. Looking over the top of your monitor, you can see that it’s pretty quiet in the office. Many of the engineers are on their summer vacation since their kids are off school, and they’ve picked a perfect moment to do so: the afternoon sky is a deep azure blue and is barely tainted by a single wisp of cirrus cloud.

Still, you don’t mind being in the office while your colleagues are away. You don’t mind at all: you get a rare fortnight where you have very little interruption. You’re finding your flow state, you’re getting lots of programming done, and the icing on the proverbial cake is that a warm convection of summer breeze is passing by your neck. Bliss.

. . .

You see a notification going off on Slack. You switch windows.

It’s the production environment. Uh oh. You minimize your IDE and switch to the Web browser, heading over to the monitoring dashboard. There’s one red dot, which you click. It’s the black box check for the API, which just timed out. That’s weird. You open up a new tab and go to the login page for your application, but it doesn’t load.

Oh dear.

You switch back to the monitoring dashboard. Ten red dots. Three black box checks have failed. The homepage won’t respond. Two of the databases are reported as offline. The Elasticsearch cluster has also become unhealthy with half of your index shards unassigned.

Oh dear, oh dear. Cold sweats.

You grab your colleagues that are sitting near you. You begin diving into logs together, trying to work out what’s going on. One of the customer support agents comes jogging over. The thudding of their footsteps increases in volume as they get near you.

“What’s going on? Tickets are flooding in.”

“We don’t know yet, we need to investigate,” you reply, with one eye on the logs that you’re scrolling through.

“OK, well what do I say?”

“We’re looking into it.”

“When’s it going to be fixed?”

“No idea. We’ve got to work out what’s going on first.”

The phone rings. You pick up. It’s your sales director. She’s not happy.

“In the middle of a pitch here. What’s going on? Shaun has gone back to slides, but if this lasts much longer, we’re going to look really dumb out here.”

“Not sure what’s going on yet, we need to investigate,” you reply, although what you’re really concentrating on is the cacophony of errors appearing in application logs.

“Let me know, be quick. Call me back.”

And the phone call ends with a click.

Back in the logs, you’re trying to see exactly what caused that initial exception to occur. Then you hear the deep thuds again on the office carpet. It gets louder. It’s the CEO, looking really quite cross.

“Did you know the app is down?”

. . .

Chaos is normal

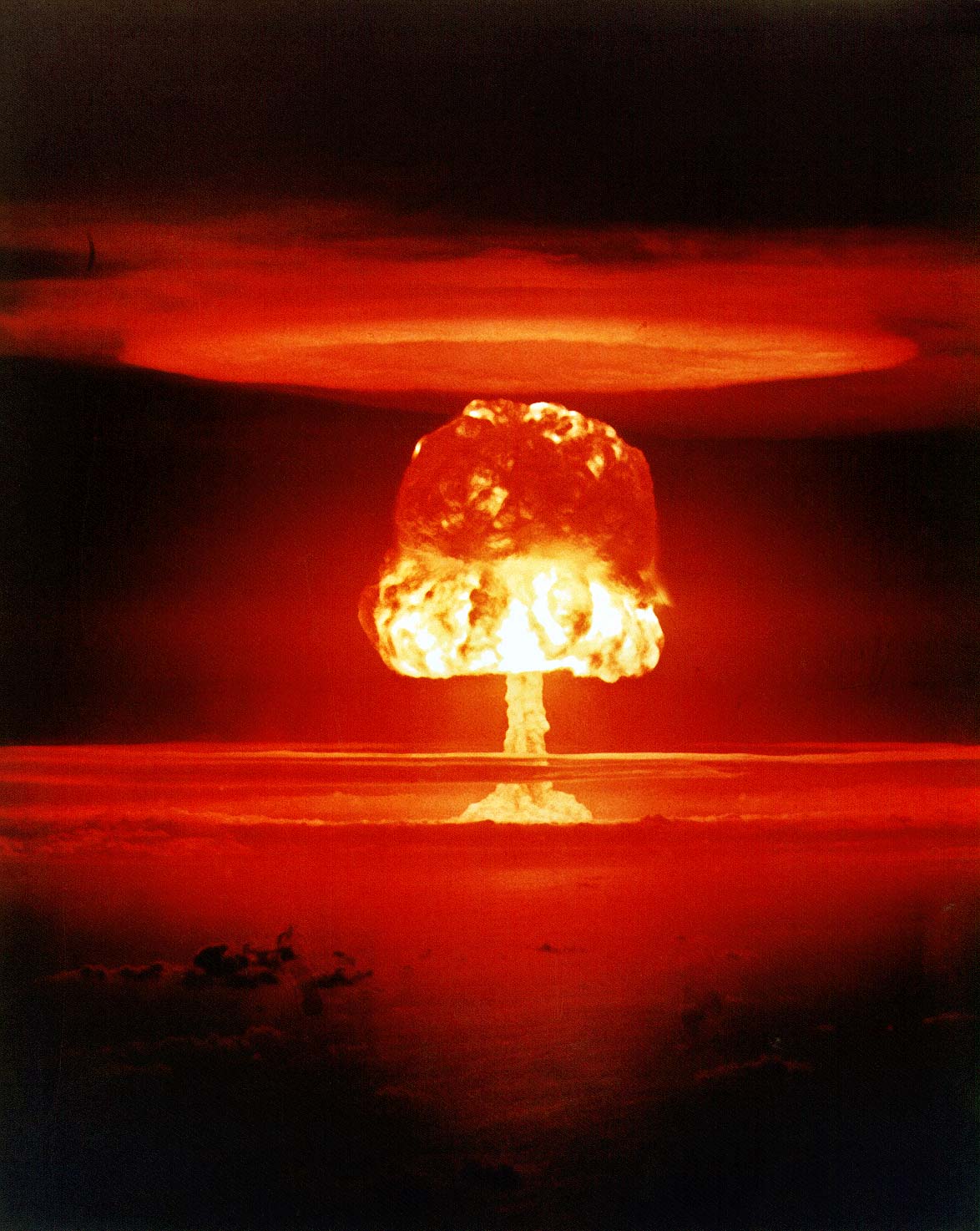

If you are working at a SaaS company then I will make you a bet: something is going to go horribly wrong in production this year. I don’t mean an issue with the UI, or a bug in a feature, I’m talking about something truly nasty like a networking problem at your data center, or a database having corrupt data written to it that then replicates to the failover and backups, or a DDoS attack, you name it: one of many potentially woeful stress-inducing panics.

Yes, all of those have happened to us, and not only once. Many times.

It happens. You can’t differentiate yourself from other companies by never experiencing outages. However, you can put in place some process that maintains some sense of order when everything is on fire and ensures that those that need to fix the issue are able to do so, and those that need to be informed, are.

Outages and unreliable service in SaaS will cost you a lot of money. Not only will repeated incidents cost you money via the salaries of the engineers who spend their time fixing them rather than improving the product, but a slow or unreliable website will also cause your customers to lose their patience, open another tab, and click on your competitor. When was the last time you waited more than 10 seconds for a site to load? Your business was lost in those moments.

Defining those moments of panic

To the disorganized or inexperienced, these chaotic moments of panic are truly awful. You don’t know what to do first, you’re overwhelmed, and your unease transmits to those around you, causing a swell, then a breaking wave, of paranoia. However, these moments can feel saner with a simple process wrapped around them to guide you through the mess.

But first, some definitions. The excellent Art of Scalability defines incidents and problems based on definitions from ITIL:

- An incident is any event that reduces the quality of service. This includes downtime, slowness to the user, or an event that gives incorrect responses or data to the user.

- A problem is the unknown cause of one or more incidents, often identified as the result of multiple similar incidents.
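To make the distinction concrete, here is a minimal Python sketch of how a log of incidents might surface candidate problems. Everything in it is an assumption for illustration: the `Incident` record, the free-form `symptom` tag, and the threshold of two similar incidents are hypothetical, not something taken from ITIL or the book.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Incident:
    """One event that reduced the quality of service."""
    summary: str
    symptom: str  # hypothetical free-form tag, e.g. "db-failover"


def candidate_problems(incidents, threshold=2):
    """Group incidents by symptom; repeated symptoms hint at a problem."""
    by_symptom = defaultdict(list)
    for incident in incidents:
        by_symptom[incident.symptom].append(incident)
    # Keep only symptoms seen at least `threshold` times.
    return {
        symptom: group
        for symptom, group in by_symptom.items()
        if len(group) >= threshold
    }


# Hypothetical incident log for illustration only.
incident_log = [
    Incident("API timed out", "db-failover"),
    Incident("Login page would not load", "db-failover"),
    Incident("Search results stale", "es-shards-unassigned"),
]
problems = candidate_problems(incident_log)
```

In this made-up log, the two incidents sharing a symptom are grouped as one candidate problem, while the one-off incident is left alone.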

Incidents and problems are a massive pain. They are unexpected, unwanted, destroy the productivity of the teams that are working on them, and negatively affect morale. However, they’re always going to happen. You need to be prepared.

Your weapons against these situations are two-fold:

- You need to accept that incidents are going to happen, and have an incident management process that allows you and your staff the space and coordination to restore the required level of service whilst communicating effectively with the rest of the business.

- You need to do your best to track and log incidents so that they don’t turn into problems, or in the case that they do, those problems are kept as small as possible.

Let’s begin by looking at incident management.

Incident management

When things blow up, there are three hats that need to be worn by the staff working on the incident:

- The manager of the situation, who is able to make decisions, delegate actions to staff, and to be the point of contact.

- The communicator, who is responsible for broadcasting to the business what is going on at regular intervals.

- The technical expert, who is identifying and fixing the issue.

In organizations that are not well-versed in incident management, these three hats often get worn by the same person, making them ineffective at all three strands of work. What works best in practice is to ensure that these hats are worn by at least two people, with the technical expert hat always worn exclusively by those who are doing the fixing. Let those that are working on fixing the problem do nothing but fix it calmly.

The incident manager should be an experienced member of staff who has the authority to make difficult calls and is comfortable doing so. One example is deciding whether to keep the service running at reduced speed for 6 hours, or to go offline to a holding page for 30 minutes to perform critical maintenance and resume at full speed afterwards.

The communicator hat can also be worn by the incident manager if they are content and competent at doing so. The communicator should broadcast through the expected channels to the rest of the business what the latest is with the incident, what is being done about it, and when the next update or expected fix is going to be.

Identify people in your organization who are able to fill these roles. Ideally, each hat should have multiple staff who can wear it. Then, you can define a rota to ensure that people take turns, and so that your incident management process isn’t affected too much if numerous people are on vacation.
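A rota like this can be as simple as a spreadsheet, but as a rough sketch, here is one way to generate it in Python. The role names, staff names, and the `away` vacation calendar are all hypothetical, and the rotation is a plain round-robin that skips anyone who is away; it is one possible approach, not a description of how we actually build ours.

```python
from itertools import cycle


def build_rota(staff_by_role, weeks, away=None):
    """Assign one person per role per week, skipping anyone who is away.

    staff_by_role: dict of role -> list of names (hypothetical data).
    away: dict of week number -> set of names on vacation that week.
    """
    away = away or {}
    pools = {role: cycle(names) for role, names in staff_by_role.items()}
    rota = []
    for week in range(1, weeks + 1):
        unavailable = away.get(week, set())
        assignment = {}
        for role, names in staff_by_role.items():
            # Try each person at most once so we never loop forever.
            for _ in range(len(names)):
                person = next(pools[role])
                if person not in unavailable:
                    assignment[role] = person
                    break
            else:
                raise ValueError(f"nobody available for {role} in week {week}")
        rota.append(assignment)
    return rota


# Hypothetical staff; Ben can wear either the manager or communicator hat.
staff = {
    "manager": ["Ana", "Ben"],
    "communicator": ["Ben", "Cat"],
    "expert": ["Dev", "Eli"],
}
rota = build_rota(staff, weeks=3, away={2: {"Ben"}})
```

Note that this sketch lets one person hold both the manager and communicator hats in the same week, which matches the advice above, and it raises an error when a role cannot be covered, which is exactly the gap you want to find before an incident rather than during one.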

A playbook for incidents

Define a playbook to follow when an incident occurs. A simple one could look like the following:

1. The notification that an incident is taking place, and the assignment of staff into the three roles.

2. A decision on the means of communication between those working on the incident. Typically we use Slack for this, but any way is fine as long as it works for you.

3. Communication to the rest of the business that an incident is occurring: what it is, what’s being done about it, and how regular the updates are going to be. Typically we give updates every thirty minutes via Slack. Additionally, you should update your customer-facing status page if required, and send out notifications to customers if it is deemed necessary.

4. Regular internal communication documenting what is being done to recover from the incident. This includes any major decisions that have been made. This can be used later to review the incident and learn from it.

5. The continuation of steps 3 and 4 until the incident is fixed. When it is fixed, the business should be notified and the work that resolved the issue should be documented.

6. Scheduling a 5 Whys postmortem to get to the root of the incident and decide on actions to prevent it in the future.

Following a playbook outline such as this ensures that the business is kept up to date while those that are working on fixing it are able to do so uninterrupted.
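The record-keeping and regular-update parts of the playbook can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `IncidentLog` class, its method names, and the example timestamps are hypothetical, and it deliberately does not talk to Slack or a status page. The thirty-minute interval is the cadence mentioned in the playbook.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Optional

UPDATE_INTERVAL = timedelta(minutes=30)  # update cadence from the playbook


@dataclass
class IncidentLog:
    """Timeline of one incident, kept for the later review."""
    title: str
    started_at: datetime
    entries: list = field(default_factory=list)
    last_broadcast: Optional[datetime] = None

    def record(self, when, author, note):
        """Internal note: what was done or decided, and by whom."""
        self.entries.append((when, author, note))

    def broadcast(self, when, note):
        """Update to the business; also kept in the timeline."""
        self.record(when, "communicator", note)
        self.last_broadcast = when

    def next_update_due(self):
        """The next broadcast is due one interval after the last one."""
        base = self.last_broadcast or self.started_at
        return base + UPDATE_INTERVAL


# Hypothetical timeline for illustration only.
start = datetime(2024, 8, 14, 14, 0)
log = IncidentLog("API timing out", start)
log.broadcast(start + timedelta(minutes=5),
              "Investigating API timeouts; next update in 30 minutes.")
log.record(start + timedelta(minutes=12), "expert",
           "Two databases reported offline; checking replication.")
```

Keeping broadcasts and internal notes in the same timeline means the material for the postmortem is already collected by the time the incident is closed.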

After an incident

As specified in point 6 above, once an incident is over and the service has been restored, it is useful to run a 5 Whys session. These are well-documented elsewhere on the Web, so I won’t go into detail about how to run one. However, it is important to note that you do not want incidents to turn into problems that go unfixed long term.

Your 5 Whys session should hopefully point to a piece of your infrastructure that needs improving, or a part of your application that needs to scale better.

In order to nip the issue in the bud, you should identify the work that should be done to prevent it from happening again. Create tickets, assign actions and owners. The follow-up from an incident is more important than the fix itself. Ensure that the issues identified are prevented from happening again in the future by improving monitoring, scalability, reliability, or whatever it takes.

Sometimes, depending on the scale of parts of your architecture, it may be worthwhile spending money to get an external expert in to guide your path towards scalability. We have done so at various points for our main data stores. Although expensive on paper in the short term, the reduction in incidents and problems and the increase in availability of our application have kept our customers loyal.

In summary

Don’t just treat incidents as annoying things getting in the way of doing your real work. Take them seriously and do the work to make them happen less in the future; you don’t want them to turn into problems that drive your customers away.
